Thursday, October 26, 2023

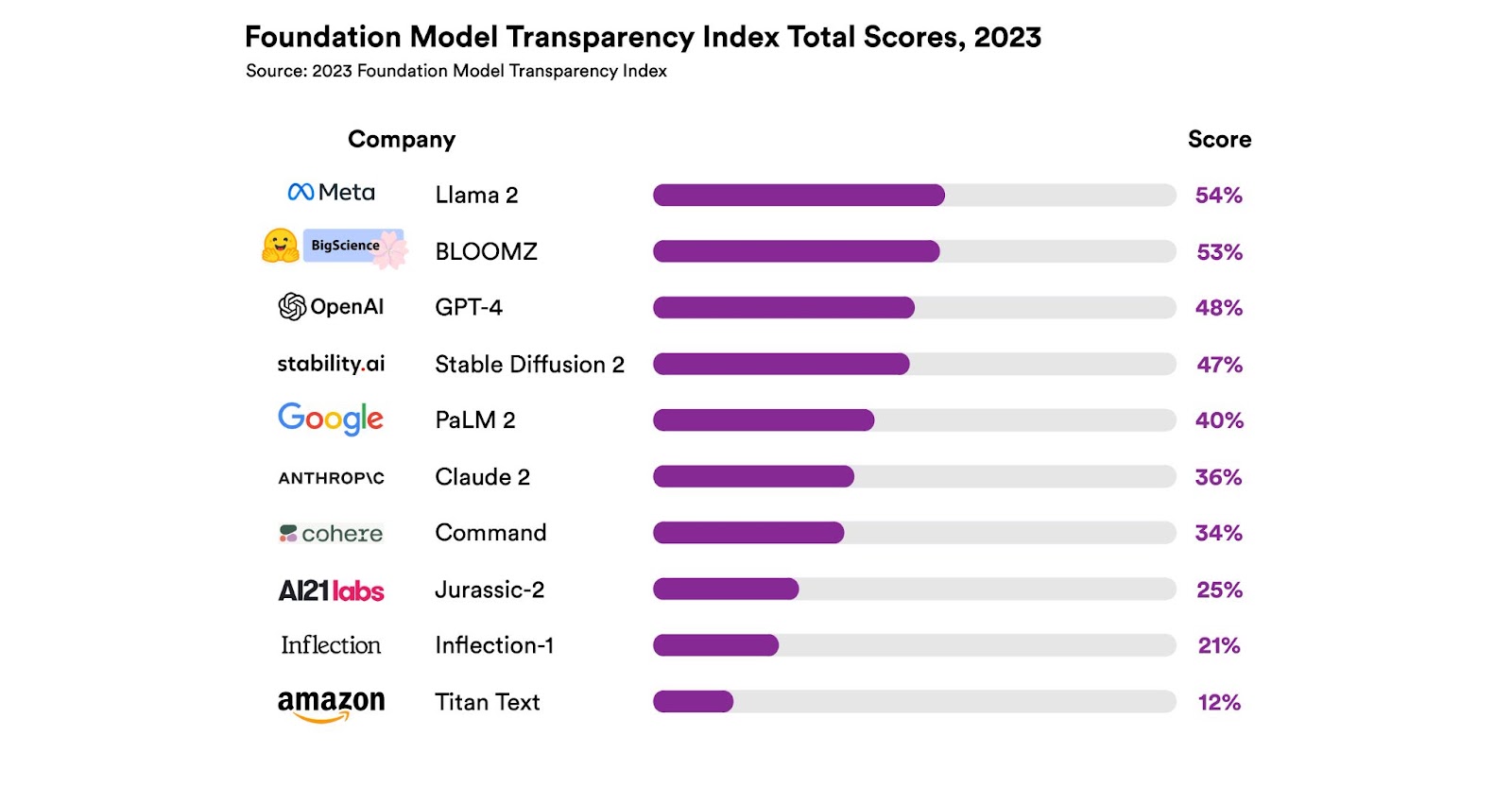

The Foundation Model (LLM) Transparency Index

Wednesday, October 25, 2023

AI already makes decisions that may affect you

Tuesday, October 24, 2023

Classic TV Debate on AI & Mind

In this TV debate from 1984, John Searle (philosophy professor at Berkeley) and Margaret Boden (AI professor at Sussex) debate AI, intelligence, understanding and consciousness. Two things stand out. First, the intellectual quality of the debate is remarkable; you'd never see a programme like this on TV today, which has been totally dumbed down. Second, Searle's argument, the Chinese Room, is just as relevant to ChatGPT as it was to the comparatively dumb AI of the 80s. Can a computer shuffling 1s and 0s according to a program understand anything?

Tuesday, October 17, 2023

CBR and Large Language Models Report on arXiv

I've just published a report on arXiv titled A Case-Based Persistent Memory for a Large Language Model. Case-based reasoning (CBR) is a methodology for problem-solving that can use any appropriate computational technique. The report argues that CBR researchers have somewhat overlooked recent developments in deep learning and large language models (LLMs). The technical developments underlying the recent breakthroughs in AI have strong synergies with CBR and could be used to provide a persistent memory for LLMs to make progress towards Artificial General Intelligence.

https://doi.org/10.48550/arXiv.2310.08842
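
To make the idea of a case memory a little more concrete, here is a minimal sketch of the CBR retrieve and retain steps in Python. It is not the method from the report: the `CaseMemory` class, the `embed` and `cosine` helpers and the example cases are all hypothetical, and the bag-of-words vector is only a toy stand-in for the learned embeddings an LLM-based system would presumably use. The case base here lives in memory only; a real persistent memory would write it to disk or a database.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for a learned embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class CaseMemory:
    """Hypothetical case base holding (problem, solution) pairs."""

    def __init__(self):
        self.cases = []

    def retain(self, problem, solution):
        # Store the case together with its vector so retrieval is cheap.
        self.cases.append((problem, solution, embed(problem)))

    def retrieve(self, query, k=1):
        # Rank stored cases by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self.cases, key=lambda c: cosine(q, c[2]), reverse=True)
        return [(problem, solution) for problem, solution, _ in ranked[:k]]


memory = CaseMemory()
memory.retain("printer shows paper jam error", "open tray two and remove the stuck sheet")
memory.retain("laptop will not connect to wifi", "restart the router and forget the network")

best = memory.retrieve("my printer reports a paper jam", k=1)
```

In a fuller system the retrieved case would be passed to the LLM as context (the reuse step), and the new problem and its answer would be retained as a fresh case once solved.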